Comparative Evaluations of Large Language Models for Biosecurity, Cybersecurity and Chemical Risks

Introduction

Large language models (LLMs) have a wide range of applications across many fields of human endeavor, for both beneficial and malicious ends. In cybersecurity, for example, LLMs are applied to vulnerability detection, intrusion detection, phishing detection and malware analysis (Xu et al., 2024). LLMs can be used to automate the design, planning and execution of scientific experiments (Boiko et al., 2023). They can also facilitate the navigation of chemical space, for example in organic synthesis, autonomous drug discovery and materials design (Bran et al., 2023; Caramelli et al., 2021; Granda et al., 2018), and in the optimization of chemical reactions (Angello et al., n.d.). Similarly, in biology, LLMs and other AI systems can facilitate tasks such as protein engineering (Wu et al., 2019).

Alongside these and many other benefits, LLMs carry the risk of enabling malicious actors to cause large-scale harm by democratizing access to the knowledge needed to create dangerous biological and chemical agents, as demonstrated by Gopal et al. (2023). Furthermore, Urbina et al. (2022) showed that AI models for drug discovery could be repurposed for the development of biochemical weapons. LLMs have also enabled students who are not scientists to identify four potential pandemic pathogens, along with information about how to synthesize them (Soice et al., 2023).

There is, therefore, a need to evaluate LLMs for dangerous capabilities both before and after release, as recommended in (The White House, 2023). This work evaluates 11 open-source LLMs for hazardous knowledge of biosecurity, chemical security and cybersecurity topics. It seeks to answer the following questions:

How much hazardous biosecurity, chemical and cybersecurity knowledge do these LLMs have?

How does hazardous biosecurity, chemical and cybersecurity knowledge scale with the size of the models?

How does hazardous biosecurity, chemical and cybersecurity knowledge correlate with the general capabilities of the models?

Evaluations

WMDP benchmark

The Weapons of Mass Destruction Proxy (WMDP) benchmark, developed by Li et al. (2024), consists of 4,157 multiple-choice questions that serve as a proxy measurement of hazardous knowledge in biosecurity, cybersecurity and chemical security. Of these 4,157 questions, 1,520 are on biosecurity, 412 on chemical security and 2,225 on cybersecurity. The biosecurity questions cover areas such as bioweapons and bioterrorism; reverse genetics and easy editing; pathogens; viral vector research; dual-use virology; and expanding access. The chemical questions cover general knowledge; synthesis; sourcing and procurement; purification; analysis and verification; development mechanisms; and bypassing detection, among others. The cybersecurity questions cover exploitation, background knowledge, weaponization, post-exploitation and reconnaissance.
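Multiple-choice benchmarks such as WMDP are scored by checking whether the option a model prefers matches the answer key. The sketch below assumes one common zero-shot approach, picking the option to which the model assigns the highest log-likelihood; the record format, function names and toy values are hypothetical and are not taken from the evaluation code used in this work. It also checks that the per-category counts above sum to 4,157 and records the 0.25 accuracy that random guessing achieves on four-option questions, which is the floor against which low scores should be read.

```python
from collections import defaultdict

# Hypothetical record format: (category, per-option log-likelihoods, index of correct option).
# WMDP questions have four options (A-D), so each list holds four scores.


def predicted_option(option_logliks):
    """Return the index of the option the model scores highest (argmax)."""
    return max(range(len(option_logliks)), key=lambda i: option_logliks[i])


def accuracy_by_category(records):
    """Fraction of questions answered correctly, per category."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for category, option_logliks, answer in records:
        total[category] += 1
        if predicted_option(option_logliks) == answer:
            correct[category] += 1
    return {cat: correct[cat] / total[cat] for cat in total}


# Question counts stated for this version of WMDP; they add up to the stated total.
WMDP_COUNTS = {"bio": 1520, "chem": 412, "cyber": 2225}
assert sum(WMDP_COUNTS.values()) == 4157

# With four options, uniform random guessing has an expected accuracy of 0.25.
CHANCE_ACCURACY = 1 / 4

if __name__ == "__main__":
    toy_records = [  # made-up values, for illustration only
        ("bio", [-1.2, -0.4, -2.0, -3.1], 1),
        ("bio", [-0.9, -1.5, -0.3, -2.2], 0),
        ("cyber", [-2.0, -2.5, -0.1, -1.0], 2),
    ]
    print(accuracy_by_category(toy_records))  # {'bio': 0.5, 'cyber': 1.0}
```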

MMLU benchmark

To examine how hazardous knowledge correlates with performance on other natural language understanding tasks, we also evaluate a subset of the LLMs on the Massive Multitask Language Understanding (MMLU) benchmark (Hendrycks et al., 2020).

LLMs under evaluation

The selection of the models was based on the following:

Models of comparable size. Models with 6 billion to 8 billion parameters.

Similar models of different sizes. To evaluate how these metrics scale with model size, the Mistral AI models Mistral-7B, Mixtral-8x7B and Mixtral-8x22B, as well as Falcon-7B and Falcon-11B, were selected.

Below are brief descriptions of the LLMs.

GPT-J-6B. GPT-J is a 6-billion-parameter language model based on generative pretraining. It consists of 28 layers with a model dimension of 4096 and was trained using the same set of byte-pair encodings (BPEs) as GPT-2 and GPT-3 (Wang, 2023).

Falcon-7B and Falcon-11B. Falcon-7B and Falcon-11B are decoder-only models with 7 billion and 11 billion parameters respectively, built by the Technology Innovation Institute (TII). Falcon-7B was trained on 1,500B tokens of RefinedWeb, while Falcon-11B was trained on over 5,000B tokens in 11 languages (Almazrouei, 2023).

Mistral-7B. Mistral-7B-v0.1 is a seven-billion-parameter model based on generative pretraining that uses sliding window attention (SWA) (Mistral AI, 2023).
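Sliding window attention restricts each token to attending over a fixed number of recent positions instead of the whole prefix. The sketch below builds such a causal mask; the function name is hypothetical, the convention that a position sees itself plus the previous `window - 1` positions is an assumption (implementations differ by one), and the toy window is far smaller than the one Mistral-7B actually uses.

```python
def sliding_window_mask(seq_len, window):
    """Causal sliding-window attention mask.

    mask[i][j] is True when query position i may attend to key position j.
    Convention assumed here: each position sees itself and the previous
    window - 1 positions, i.e. i - window < j <= i.
    """
    return [
        [i - window < j <= i for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    # Toy sizes for readability: 'x' = may attend, '.' = masked out.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
```

Because attention layers are stacked, information can still travel beyond one window: after k layers, a position can be influenced by tokens up to roughly k times the window size back, while each layer's attention cost grows only with the window rather than the full sequence length.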

Zephyr-7B-β. Zephyr-7B-β is a 7-billion-parameter version of mistralai/Mistral-7B-v0.1, fine-tuned on publicly available synthetic datasets using Direct Preference Optimization (DPO) (Tunstall et al., 2023).

Mixtral-8x7B and Mixtral-8x22B. Mixtral-8x7B and Mixtral-8x22B are both sparse Mixture-of-Experts (SMoE) decoder-only models. Mixtral-8x7B has 46.7 billion parameters but uses only 12.9 billion of them per token (Mistral AI, 2023), while Mixtral-8x22B uses only 39 billion active parameters out of 141 billion (Mistral AI, 2024). This sparsity improves inference speed and reduces cost.
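To illustrate why a sparse MoE model uses only part of its weights for each token, the sketch below routes one token to its top-2 experts out of 8 and softmax-normalizes the selected router logits, which is how Mixtral's routing is publicly described. The function names and the toy logits are hypothetical; a real router computes its logits with a learned linear layer on the token's hidden state. The sketch also computes the active-parameter share from the figures quoted above, which comes to roughly 28% for both Mixtral models.

```python
import math


def top_k_route(router_logits, k=2):
    """Pick the k highest-scoring experts and softmax-normalize their logits.

    Returns (expert_indices, weights); only these k experts run for the token,
    and their outputs would be combined using these weights.
    """
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    m = max(router_logits[i] for i in top)  # subtract the max for numerical stability
    exps = [math.exp(router_logits[i] - m) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]


def active_fraction(active_params, total_params):
    """Share of the model's parameters used for each token."""
    return active_params / total_params


if __name__ == "__main__":
    # Hypothetical router logits for one token over 8 experts.
    logits = [0.1, 2.0, -1.0, 1.5, 0.0, 0.3, -0.5, 0.2]
    print(top_k_route(logits))  # experts [1, 3], weights summing to 1
    # Parameter counts in billions, as quoted in the description above.
    print(round(active_fraction(12.9, 46.7), 3))  # Mixtral-8x7B
    print(round(active_fraction(39, 141), 3))     # Mixtral-8x22B
```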

MPT-7B. MPT-7B is a decoder-only transformer with 7 billion parameters from the MosaicPretrainedTransformer (MPT) family of models, trained on one trillion tokens of English text and code (MosaicML NLP Team, 2023).

Bloom-7B1. Bloom-7B1 is a decoder-only model with about seven billion parameters, trained on text in multiple languages (BigScience, 2022).

Llama-3-8B and Llama-3-70B. Llama-3-8B and Llama-3-70B are pretrained auto-regressive language models with 8 billion and 70 billion parameters respectively (Meta, 2024).

Results

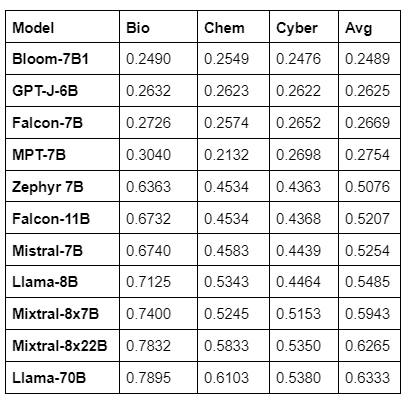

Table 1 shows the evaluation results of 11 open-source LLMs for hazardous biosecurity, chemical and cybersecurity knowledge, arranged in ascending order of average hazardous knowledge score.

Table 1 - Results of the evaluation of 11 open-source LLMs for hazardous biosecurity, chemical and cybersecurity knowledge.

We observe that among the models of comparable size, ranging from 6 billion to 7 billion parameters, Mistral-7B has the highest average hazardous knowledge score across biosecurity, cybersecurity and chemical security (0.5254), followed by Zephyr-7B (0.5076). Bloom-7B1, GPT-J-6B, Falcon-7B and MPT-7B have scores below 0.3.

Figure 1 - Bar chart of the scores of 11 open-source LLMs for hazardous biosecurity, chemical and cybersecurity knowledge.

MPT-7B, Falcon-7B, GPT-J-6B and Bloom-7B1 have scores below 0.3 in all three categories, while Llama-3-70B, Mixtral-8x22B, Mixtral-8x7B, Llama-3-8B, Mistral-7B, Falcon-11B and Zephyr-7B all score above 0.6 in biosecurity hazardous knowledge and above 0.4 in both chemical and cybersecurity knowledge. Llama-3-70B and Mixtral-8x22B have similar scores in all three categories, with Llama-3-70B scoring the highest.
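Table 1 is ordered by each model's average score, but how the average is formed matters. The sketch below assumes an unweighted mean of the three category accuracies and, for comparison, supports a question-weighted average; because WMDP has more than five times as many cybersecurity questions as chemical ones, the two averages can rank models differently. Function names, model names and scores are hypothetical placeholders, not the values in Table 1.

```python
def average_score(bio, chem, cyber, weights=None):
    """Average of the three WMDP category accuracies.

    weights=None gives an unweighted mean; passing question counts instead
    gives a question-weighted (micro) average.
    """
    scores = (bio, chem, cyber)
    if weights is None:
        weights = (1, 1, 1)
    return sum(s * w for s, w in zip(scores, weights)) / sum(weights)


def rank_ascending(results, weights=None):
    """Sort (model, bio, chem, cyber) rows by average score, lowest first."""
    return sorted(results, key=lambda row: average_score(*row[1:], weights=weights))


if __name__ == "__main__":
    # Made-up models and scores, for illustration only.
    toy = [("model-a", 0.70, 0.50, 0.45), ("model-b", 0.28, 0.26, 0.27)]
    for name, *scores in rank_ascending(toy):
        print(name, round(average_score(*scores), 4))
    # Question-weighted alternative using the WMDP counts (bio, chem, cyber).
    print(round(average_score(0.70, 0.50, 0.45, weights=(1520, 412, 2225)), 4))
```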

Hazardous biosecurity, chemical and cybersecurity knowledge increases with scale, as shown in Figure 2, which is based on Mistral-7B, Mixtral-8x7B and Mixtral-8x22B: the larger the model, the more hazardous knowledge it has. Falcon-7B and Falcon-11B, as well as Llama-3-8B and Llama-3-70B, show similar patterns.

Figure 2 - How hazardous biosecurity, chemical and cybersecurity knowledge scales with model size for the Mistral models Mistral-7B, Mixtral-8x7B and Mixtral-8x22B.
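The scaling claim can be stated as a simple check: within one model family sorted by parameter count, scores should never decrease. For Mixture-of-Experts models one must choose between total and active parameters; with the counts quoted in the model descriptions, the Mistral family is ordered the same way under either choice, so the conclusion does not depend on it. The function name and the placeholder scores below are hypothetical.

```python
def increases_with_size(family):
    """True if scores never decrease as parameter count grows.

    family: list of (model_name, parameter_count_in_billions, score).
    """
    ordered = sorted(family, key=lambda row: row[1])
    scores = [score for _, _, score in ordered]
    return all(a <= b for a, b in zip(scores, scores[1:]))


if __name__ == "__main__":
    # Sizes in billions from the model descriptions; scores are placeholders.
    by_total = [("Mistral-7B", 7, 0.1), ("Mixtral-8x7B", 46.7, 0.2), ("Mixtral-8x22B", 141, 0.3)]
    by_active = [("Mistral-7B", 7, 0.1), ("Mixtral-8x7B", 12.9, 0.2), ("Mixtral-8x22B", 39, 0.3)]
    print(increases_with_size(by_total), increases_with_size(by_active))
```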

The results of evaluating seven LLMs with six to eight billion parameters on the MMLU and WMDP benchmarks are shown in Table 2. Performance on MMLU correlates positively with performance on WMDP, which suggests that the more capable a model is, the more hazardous knowledge it possesses.

Table 2 - Results of the evaluations of seven LLMs with six to eight billion parameters on the MMLU and WMDP benchmarks.

Figure 3 - How hazardous knowledge correlates with model capability on MMLU tasks.
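The positive relationship between MMLU and WMDP scores can be quantified with a correlation coefficient. The sketch assumes one (MMLU, WMDP) score pair per model and computes both Pearson's r and Spearman's rank correlation; with only seven models, a single outlier can move r substantially, so the rank-based version is a useful cross-check. The function names are hypothetical, and SciPy's `scipy.stats.pearsonr` and `scipy.stats.spearmanr` provide library equivalents.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


def ranks(values):
    """Rank positions (0 = smallest); assumes no ties, for simplicity."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0] * len(values)
    for rank, i in enumerate(order):
        r[i] = rank
    return r


def spearman(xs, ys):
    """Spearman rank correlation: Pearson correlation of the ranks (no ties)."""
    return pearson(ranks(xs), ranks(ys))
```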

Key takeaways

The more capable models also have more hazardous knowledge. As can be seen in Figure 1, more capable models such as Llama-3-70B and Mixtral-8x22B also have greater hazardous knowledge. As frontier AI developers pursue systems with general intelligence, caution is needed. Meta, for example, is working towards building an open-source AGI (Koetsier, 2024). AGI systems are expected to be more capable than current foundation models. If mitigation efforts do not change the pattern shown in Figure 2, malicious actors might be able to leverage an open-source AGI to cause a far-reaching catastrophe.

Future advanced language models are therefore likely to have dangerous levels of hazardous knowledge. If the trend in Figure 2 is extrapolated to models with 500 billion parameters or more, their levels of hazardous knowledge would likely be unsafe for public release. This supports the argument of Hendrycks et al. (2023) that future AI systems could empower malicious actors.
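Extrapolating Figure 2 amounts to fitting a trend and evaluating it at a larger size. The sketch below assumes a straight line in log10 of the parameter count, a common but not guaranteed functional form, and clips predictions between the 0.25 random-guessing level of four-option questions and perfect accuracy, since an unbounded linear trend would eventually exceed 1.0. For MoE models one would also have to choose between total and active parameters. Function names and the placeholder points are hypothetical, not the results in Figure 2.

```python
import math


def fit_log_linear(sizes_b, scores):
    """Least-squares fit of score = a + b * log10(size), with size in billions."""
    xs = [math.log10(s) for s in sizes_b]
    n = len(xs)
    mx, my = sum(xs) / n, sum(scores) / n
    b = sum((x - mx) * (y - my) for x, y in zip(xs, scores)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return a, b


def extrapolate(a, b, size_b, floor=0.25, ceiling=1.0):
    """Predicted score at size_b (billions), clipped to [chance, perfect] accuracy."""
    return min(ceiling, max(floor, a + b * math.log10(size_b)))


if __name__ == "__main__":
    # Placeholder points for illustration only.
    a, b = fit_log_linear([1, 10, 100], [0.3, 0.4, 0.5])
    print(round(extrapolate(a, b, 500), 3))
```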

Biosecurity hazardous knowledge appears to be the least mitigated in all models with an average score of 0.4 or above. In Table 1, for example, Llama-3-70B's biosecurity score is over 29% higher than its chemical score and over 46% higher than its cybersecurity score. This calls for greater efforts to mitigate biosecurity risks; without them, we may have to grapple with catastrophic consequences.
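A statement such as "over 29% greater" can be read either as a relative increase or as a gap in percentage points, and for accuracy scores the two readings give quite different numbers. The sketch below assumes the relative reading (the difference divided by the lower score) and shows the alternative for contrast; the function names and placeholder accuracies are hypothetical, not Llama-3-70B's actual scores.

```python
def relative_increase_pct(higher, lower):
    """How much larger `higher` is than `lower`, as a percentage of `lower`."""
    return (higher - lower) / lower * 100


def point_difference(higher, lower):
    """Absolute gap in percentage points between two accuracies in [0, 1]."""
    return (higher - lower) * 100


if __name__ == "__main__":
    # Placeholder accuracies for illustration only.
    bio, chem = 0.65, 0.50
    print(round(relative_increase_pct(bio, chem), 1))  # 30.0 (% greater)
    print(round(point_difference(bio, chem), 1))       # 15.0 (points)
```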

The challenge of maintaining capabilities and minimizing hazardous knowledge. Machine unlearning has been applied to reduce hazardous knowledge in large language models (Li et al., 2024). Although machine unlearning cannot completely remove hazardous knowledge (Shi et al., 2023), it is still useful. However, it comes at a cost: while it reduces hazardous knowledge, it also reduces model capabilities. The challenge, then, is: how do we minimize hazardous knowledge while maintaining the model capabilities that are beneficial?
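Alongside the benchmark, Li et al. (2024) introduce an unlearning method, RMU, whose objective makes this trade-off explicit: one term pushes the model's internal activations on hazardous text towards a fixed, scaled random direction, while a second, weighted term keeps its activations on benign text close to those of the original, frozen model. The sketch below is a simplified rendering on plain vectors, not the paper's implementation; in the original, activations come from a chosen layer and only some weights are updated. Function and argument names and the toy values are hypothetical, and `c` and `alpha` are tuning hyperparameters.

```python
def mse(u, v):
    """Mean squared error between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v)) / len(u)


def unlearning_loss(h_forget, random_dir, h_retain, h_retain_frozen, c, alpha):
    """Two-term unlearning objective in the style of RMU (simplified sketch).

    h_forget:        updated model's activations on hazardous (forget) text
    random_dir:      fixed random unit vector; forget activations are pushed towards c * random_dir
    h_retain:        updated model's activations on benign (retain) text
    h_retain_frozen: original model's activations on the same retain text
    alpha weights capability preservation against forgetting.
    """
    forget_term = mse(h_forget, [c * x for x in random_dir])
    retain_term = mse(h_retain, h_retain_frozen)
    return forget_term + alpha * retain_term


if __name__ == "__main__":
    # Toy 2-D vectors; real activations are high-dimensional hidden states.
    u = [0.6, 0.8]  # a unit vector
    print(unlearning_loss([1.0, 1.0], u, [0.5, 0.5], [0.5, 0.4], c=2.0, alpha=10.0))
```

Raising `alpha` penalizes drift on benign text more heavily and so favors retained capability, while lowering it favors forgetting; the difficulty described above is that no setting removes the trade-off entirely.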

Acknowledgement

This work was supported by BlueDot Impact.

References

Almazrouei, E. (2023, June 20). tiiuae/falcon-7b. Hugging Face. Retrieved June 7, 2024, from https://huggingface.co/tiiuae/falcon-7b

Angello, N. H., Rathore, V., Beker, W., Wołos, A., & Burke, M. D. (n.d.). Closed-loop optimization of general reaction conditions for heteroaryl Suzuki-Miyaura coupling.

BigScience. (2022, May 26). bigscience/bloom-7b1. Hugging Face. Retrieved June 7, 2024, from https://huggingface.co/bigscience/bloom-7b1

Boiko, D., MacKnight, R., Kline, B., & Gomes, G. (2023, December). Autonomous chemical research with large language models.

Boiko, D. A., MacKnight, R., & Gomes, G. (2023). Emergent autonomous scientific research capabilities of large language models. https://arxiv.org/abs/2304.05332

Bran, A. M., Cox, S., Schilter, O., Baldassari, C., White, A. D., & Schwaller, P. (2023). ChemCrow: Augmenting large-language models with chemistry tools. https://arxiv.org/abs/2304.05376

Caramelli, D., Granda, J. M., & Mehr, H. M. (2021). Discovering New Chemistry with an Autonomous Robotic Platform Driven by a Reactivity-Seeking Neural Network.

Gennari, J., Lau, S., Perl, S., Parish, J., & Sastry, G. (2024). Considerations for Evaluating Large Language Models for Cybersecurity Tasks.

Gopal, A., Helm-Burger, N., Justen, L., Soice, E. H., Tzeng, T., & Jeyapragasan, G. (2023, October 25). Will releasing the weights of future large language models grant widespread access to pandemic agents?

Granda, J. M., Donina, L., Dragone, V., Long, D.-L., & Cronin, L. (2018). Controlling an organic synthesis robot with machine learning to search for new reactivity.

Hendrycks, D., Mazeika, M., & Woodside, T. (2023). An Overview of Catastrophic AI Risks. https://arxiv.org/pdf/2306.12001

Jumper, J., Evans, R., & Pritzel, A. (2021). Highly accurate protein structure prediction with AlphaFold. https://www.nature.com/articles/s41586-021-03819-2

Koetsier, J. (2024, January 18). Meta To Build Open-Source Artificial General Intelligence For All, Zuckerberg Says. Forbes. Retrieved June 10, 2024, from https://www.forbes.com/sites/johnkoetsier/2024/01/18/zuckerberg-on-ai-meta-building-agi-for-everyone-and-open-sourcing-it/

Li, N., Pan, A., Gopal, A., Yue, S., & Berrios, D. (2024). The WMDP Benchmark: Measuring and Reducing Malicious Use With Unlearning. arXiv.

Meta. (2024, April 18). meta-llama/Meta-Llama-3-8B. Hugging Face. Retrieved June 6, 2024, from https://huggingface.co/meta-llama/Meta-Llama-3-8B

Mistral AI. (2023, September 27). Mistral 7B. Mistral AI. Retrieved June 6, 2024, from https://mistral.ai/news/announcing-mistral-7b/

Mistral AI. (2023, December). Mixtral of experts. Mistral AI. Retrieved June 6, 2024, from https://mistral.ai/news/mixtral-of-experts/

Mistral AI. (2024, April 17). Cheaper, Better, Faster, Stronger. Mistral AI. Retrieved June 6, 2024, from https://mistral.ai/news/mixtral-8x22b/

MosaicML NLP Team. (2023, May 5). mosaicml/mpt-7b. Hugging Face. Retrieved June 7, 2024, from https://huggingface.co/mosaicml/mpt-7b

Sandbrink, J. B. (2023). Artificial intelligence and biological misuse: Differentiating risks of language models and biological design tools.

Shi, W., Ajith, A., Xia, M., Huang, Y., & Liu, D. (2023, October). Detecting Pretraining Data from Large Language Models.

Soice, E. H., Rocha, R., Cordova, K., Specter, M., & Esvelt, K. M. (2023). Can large language models democratize access to dual-use biotechnology? https://arxiv.org/abs/2306.03809

Tunstall, L., Beeching, E., & Lambert, N. (2023). Zephyr: Direct Distillation of LM Alignment. Hugging Face. Retrieved June 7, 2024, from https://huggingface.co/HuggingFaceH4/zephyr-7b-beta

Urbina, F., Lentzos, F., Invernizzi, C., & Ekins, S. (2022). Dual Use of Artificial Intelligence-powered Drug Discovery.

Wang, B. (2023, May 3). EleutherAI/gpt-j-6b. Hugging Face. Retrieved June 7, 2024, from https://huggingface.co/EleutherAI/gpt-j-6b

The White House. (2023). Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence. https://www.whitehouse.gov/briefing-room/presidential-actions/2023/10/30/executive-order-on-the-safe-secure-and-trustworthy-development-and-use-of-artificial-intelligence/

Wu, Z., Kan, S. B. J., Lewis, R. D., & Arnold, F. H. (2019). Machine learning-assisted directed protein evolution with combinatorial libraries.

Xu, H., Wang, S., Li, N., Wang, K., Zhao, Y., Chen, K., Yu, T., Liu, Y., & Wang, H. (2024, May). Large Language Models for Cyber Security: A Systematic Literature Review.